Overview

Dantzig's simplex method is used to solve the following linear programming problem.

Minimize $z = \mathbf c^{\mathrm T} \mathbf x$

Subject to $A\mathbf x \leq \mathbf b$ and $\mathbf x \geq 0$

where $\mathbf c = \left(c_1, \ldots, c_n\right)$ are the coefficients of the objective function.

$\left(\cdot\right)^{\mathrm T}$ is the matrix transpose.

$\mathbf x = \left(x_1, \ldots, x_n\right)$ are the decision variables.

$\mathbf b = \left(b_1, \ldots, b_m\right)$ are the right-hand side values.

$A$ is an $m \times n$ matrix.

Basic Feasible Solution (BFS)

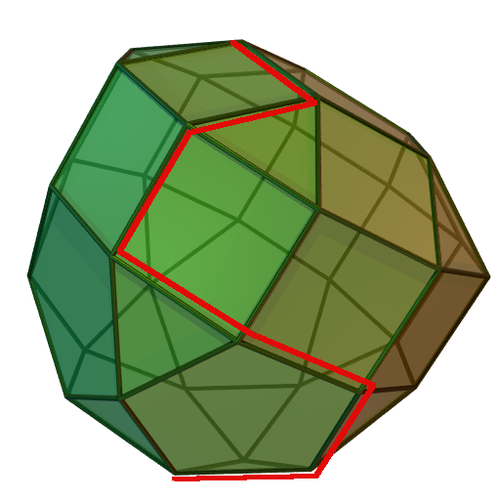

We can treat each constraint $A_i \cdot \mathbf x \leq b_i$ as a half-space. The intersection of all these half-spaces forms a (possibly unbounded) convex polytope. All points within this polytope satisfies $A \mathbf x \leq \mathbf b$. We call an extreme point of this polytope a BFS.

Here is an illustration from Wikipedia.

We can treat the objective function as a hyperplane as well, and corollary: the minimum is always at the extreme point of tangency between this half-hyperplane and the polytope.

Note that the number of extreme points are finite.

Slack Form

For an inequality $a \leq b$, it is equivalent to $a + s = b$, where $s \geq 0$.

Hence, we can add slack variables to transform inequalities into equalities: $A \mathbf x + \mathbf s = \mathbf b$.

The advantage is $\mathbf s = \mathbf b$ and $\mathbf x = 0$ is always a basic solution. Note that it may not be a basic feasible solution, since it is not guaranteed that $\mathbf b \geq 0$. We will talk about how to find a BFS in any constraints in the last chapter.

Basis

Recall the corollary in chapter BFS: the minimum is always at an extreme point.

A point is an extreme point if and only if there are exactly $n$ variables whose values are $0$ among $\mathbf x$ and $\mathbf s$. We call these variables non-basic variables, and other non-zero variables basic variables. The set of basic variables is called a basis.

The simplex method is to replace a basic variable with a non-basic variable repeatedly by pivoting.

Pivoting

Assume we have a BFS now, then how can we make our answer more optimal?

Let $\mathcal B$ denote the set of all basic variables, and $\mathcal N$ denote the set of all non-basic variables.

$x_{\mathcal B}$ can be represented using $x_{\mathcal N}$:

$$ \begin{aligned} A_{\mathcal B} \cdot \mathbf x_{\mathcal B} + A_{\mathcal N} \cdot \mathbf x_{\mathcal N} &= \mathbf b \\ A_{\mathcal B} \cdot \mathbf x_{\mathcal B} &= \mathbf b - A_{\mathcal N} \cdot \mathbf x_{\mathcal N} \\ \mathbf x_{\mathcal B} &= A_{\mathcal B}^{-1} \cdot \mathbf b - A_{\mathcal B}^{-1} \cdot A_{\mathcal N} \cdot \mathbf x_{\mathcal N} \end{aligned} $$

Due to the basis linear independence, the inverse of the matrix $A_{\mathcal B}^{-1}$ always exists.

And the objective function:

$$ \begin{aligned} \mathbf c^{\mathrm T}\mathbf x &= \mathbf c_{\mathcal B}^{\mathrm T}\mathbf x_{\mathcal B} + \mathbf c_{\mathcal N}^{\mathrm T}\mathbf x_{\mathcal N} \\ &= \mathbf c_{\mathcal B}^{\mathrm T}A_{\mathcal B}^{-1} \mathbf b - \mathbf x_{\mathcal N}\left(\mathbf c_{\mathcal N}^{\mathrm T} - \mathbf c_{\mathcal B}^{\mathrm T}A_{\mathcal B}^{-1} A_{\mathcal N}\right) \end{aligned} $$

Because $\mathbf x_{\mathcal N} = 0$, we have $\mathbf c^{\mathrm T}\mathbf x = \mathbf c_{\mathcal B}^{\mathrm T}A_{\mathcal B}^{-1} \mathbf b$.

Take the partial derivative of $\mathbf x_{\mathcal N}$:

$$ \tilde{\mathbf c}_{\mathcal N} = \frac{\partial z}{\partial \mathbf x_{\mathcal N}} = \mathbf c_{\mathcal N} - A_{\mathcal N}^{\mathrm T}\left(A_{\mathcal B}^{-1}\right)^{\mathrm T}\mathbf c_{\mathcal B} $$

Notice that $\tilde{\mathbf c}_{\mathcal B} = 0$, because $\mathbf x_{\mathcal B}$ is determined by $\mathbf x_{\mathcal N}$, and the effect caused by the change of $x_{\mathcal B}$ is captured in $\tilde{\mathbf c}_{\mathcal N}$. Furthermore,

$$ \tilde{\mathbf c} = \left(\tilde{\mathbf c}_{\mathcal N}^{\mathrm T}, \tilde{\mathbf c}_{\mathcal B}^{\mathrm T}\right)^{\mathrm T} = \mathbf c - A^{\mathrm T}\left(A_{\mathcal B}^{-1}\right)^{\mathrm T}\mathbf c_{\mathcal B} $$

is the reduced cost of BFS $\mathbf x$. $\tilde c_i < 0$ means increasing $\mathbf x_i$ will decrease the answer, and such a variable must be a non-basic variable. We use the pivot operation to make it a basic variable.

Substitute $\mathbf x_{\mathcal N} = \left(x_i, \mathbf x_{\mathcal N \setminus \left\{i\right\}}\right)$ into the expression of $\mathbf x_{\mathcal B}$:

$$ \mathbf x_{\mathcal B} = A_{\mathcal B}^{-1}\mathbf b - A_{\mathcal B}^{-1}A_ix_i $$

Hence

$$ \max\Delta_{x_i} = \min_j\left\{\frac{\left(A_{\mathcal B}^{-1}\mathbf b\right)_j}{\left(A_{\mathcal B}^{-1}A_{\mathcal i}\right)_j} : \left(A_{\mathcal B}^{-1}A_{\mathcal i}\right)_j > 0\right\} $$

When $\tilde{\mathbf c} \geq 0$, the algorithm ends.

Duality

Suppose we want to prove the answer we have found is indeed minimal, we can formulate a dual problem.

Maximize $\mathbf b^{\mathrm T}\mathbf y$

Subject to $A^{\mathrm T}\mathbf y \geq \mathbf c$ and $\mathbf y \geq 0$

According to the strong duality theorem, at optimality we have $\mathbf c^{\mathrm T} \mathbf x = \mathbf b^{\mathrm T} \mathbf y$.

The Two-Phase Method

If $\mathbf b \geq 0$ is not guaranteed, how can we find a BFS?

Recall our original problem: $A \mathbf x + \mathbf s = \mathbf b$.

Phase I:

Transform the problem into:

Minimize $1^{\mathbf T}\mathbf a$

Subject to $A\mathbf x + \mathbf s + \mathbf a = \mathbf b$ and $\mathbf x \geq 0$ and $\mathbf a \geq 0$.

Now, we can give a BFS as follows:

For all $i$ such that $b_i < 0$, flip the signs of all $A_{i, j}$, $s_i$ and $b_i$ (i.e. $-A_i - s_i + a_i = -b_i$), so that $\mathbf b \geq 0$.

- $\mathbf x = 0$

- if original $b_i \geq 0$, then $s_i = b_i$ and $a_i = 0$; otherwise, $s_i = 0$ and $a_i = b_i$.

Therefore, $\mathbf x \geq 0$, $\mathbf s \geq 0$, and $\mathbf a \geq 0$ are all guaranteed.

Run Dantzig's simplex method.

If $\mathbf a = 0$, then we remove $\mathbf a$ from the original expression: $A\mathbf x + \mathbf s = \mathbf b$, and the current $\mathbf x$ and $\mathbf s$ is a BFS, just run simplex method again.

Otherwise, no BFS exist. In other words, the problem has no feasible solution.

The Big $M$ Method

The two-phase method can be accomplished using merely one simplex method:

Minimize $M \cdot 1^{\mathbf T}\mathbf a + \mathbf c^{\mathrm T} \mathbf x$

Subject to the same constraints as the two-phase method.

Where $M$ is a sufficiently large number.

Reference

https://en.wikipedia.org/wiki/Simplex_algorithm

https://dl.acm.org/doi/10.1145/87252.88081

http://www.lokminglui.com/lpch3.pdf

https://oi-wiki.org/math/simplex/

https://www.youtube.com/watch?v=E72DWgKP_1Y

https://www.youtube.com/watch?v=_wnqe5_CLU0

https://www.youtube.com/watch?v=rXhvm6jt2YY

https://www.youtube.com/watch?v=btjxqq-vMOg

- Category: 学习笔记

- Last update: 2025-10-08 03:14:57UTC+8

- Tags: Matrix Math Linear Programming

杨永昌 · 2025-11-25 16:13

好久不见,其实有时候也不能太过忧心。人的情感就像河流,只有在河边才能看清。不要忘了elegia的话。

我最难以渡过的时间甚至不在进集训队之前, 而是在第一次进集训队和第二次之间. 可能是这个时候我才逐渐开始触碰到单凭着这些信念还不足以跨越的事物. 就像是之前提到的, 第一次摸到自己面前有一堵墙. 或许人第一次面对这样的墙的时候, 没有经验, 难免情绪激动, 感到失望绝望. 也是在这个时候我被安利了 "约翰克里斯朵夫", 导致症状有所好转. 那句安慰我的话是如此说的:

你得对着这新来的日子抱着虔敬的心. 别想什么一年十年以后的事. 你得想到今天. 把你的理论统统丢开. 所有的理论, 哪怕是关于道德的, 都是不好的, 愚蠢的, 对人有害的. 别用暴力去挤逼人生. 先过了今天再说. 对每一天都得抱着虔诚的态度. 得爱它, 尊敬它, 尤其不能污辱它, 妨害它的发荣滋长. 便是像今天这样灰暗愁闷的日子, 你也得爱. 你不用焦心. 你先看着. 现在是冬天, 一切都睡着. 将来大地会醒过来的. 你只要跟大地一样, 像它那样的有耐性就是了. 你得虔诚, 你得等待. 如果你是好的, 一切都会顺当的. 如果你不行, 如果你是弱者, 如果你不成功, 你还是应当快乐. 因为那表示你不能再进一步. 干吗你要抱更多的希望呢? 干吗为了你做不到的事悲伤呢? 一个人应当做他能做的事. ...... Als ich kann (竭尽所能).